RPC Basics

The textbook portion of this chapter (Chapter 8) covers a great deal of design evolution.

All of it is worth reading.

Concept evolution and design ideas

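- Simple IPC

- Data transfer

- Request/response model based on shared memory, zero copy

- Through the OS kernel: two copies

- L4 microkernel: memory remapping optimization, cutting two copies down to one (along with its problems)

- Notification mechanism: transferring control flow

- One-way/two-way communication: shared memory, pipes, signals, sockets, …

- Synchronous/asynchronous communication: whether to block; if blocking, the DoS risk and the timeout

- Two-party/multi-party communication

- Direct/indirect communication:

- Permission management and security (Linux ties it to file system permission checks; modern microkernels use capabilities)

- Choosing the receiver (process or thread? how to provide a naming service, relying on the file system or on global identifiers, and how to keep global identifiers from being attacked?)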

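- Linux pipes

- Shared-memory-based IPC

- L4 IPC

- Short messages passed in registers and virtual registers

- Long messages, from memory remapping to shared memory

- Design trade-offs of lazy scheduling

- Pros: when an IPC blocks only briefly, only the TCB is modified, which cuts queue operations and lowers overheads such as TLB misses

- Cons: adds complexity to the scheduler, couples scheduling time to the current IPC density, and is unsuitable for real-time systems

- Design trade-offs of direct process switch: better cache hit rates and faster control-flow transfer, at the cost of possibly violating priorities

- Communication connections: direct thread-to-thread communication brings security problems with global IDs; later systems lean toward indirect communication with a capability-based permission system

- LRPC design: rather than transferring data, transfer code (the thread migration model). By passing the IPC server process's functions and page table, the call can run in the local process on the local core without inter-core communication, turning an IPI into a syscall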

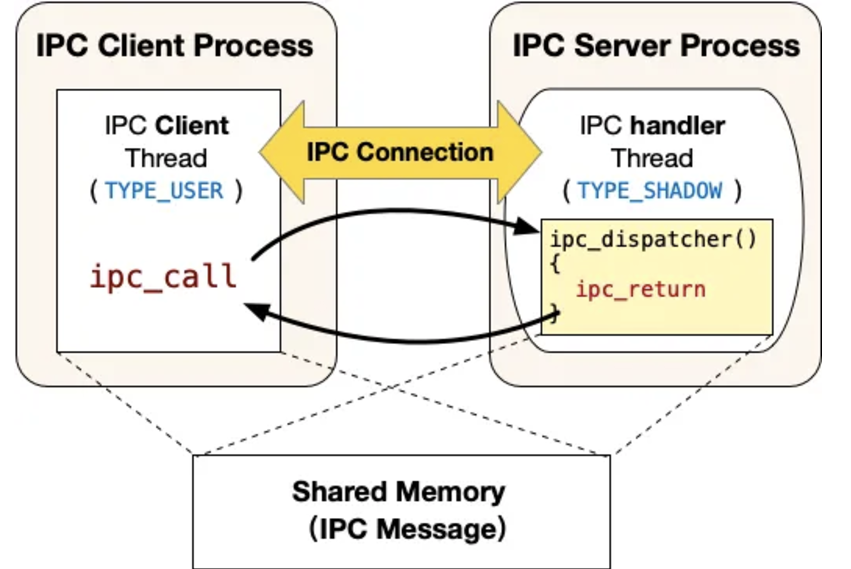

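- ChCore design: similar to L4 and LRPC. After the server and client register the service, the capability subsystem establishes the connection; from then on IPC becomes the syscalls sys_ipc_call and sys_ipc_ret

- Android design, Binder IPC: relies heavily on user-space services

- Context manager design

- Thread pool model

- Transferring Binder handles: special data plus offsets avoid scanning the payload; file descriptors and the like can also be passed

- Anonymous shared memory (Ashmem): addresses the inflexibility of mmap and of System V IPC keys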
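
The lazy scheduling trade-off listed above can be made concrete with a small simulation. This is only a sketch under assumptions: `struct tcb`, `struct ready_queue`, and every function name are hypothetical and do not come from L4's source. Blocking on IPC touches only the TCB, and stale entries are removed when the scheduler next walks the queue.

```c
#include <stddef.h>

/* Hypothetical thread states and TCB; names are illustrative, not L4's. */
enum state { READY, BLOCKED_IPC };

struct tcb {
    enum state st;
    int queued;          /* 1 while the TCB is linked in the ready queue */
    struct tcb *next;
};

struct ready_queue {
    struct tcb *head;
    int queue_ops;       /* counts real enqueue/dequeue operations */
};

/* Link the TCB at the head unless it is already linked. */
static void rq_enqueue(struct ready_queue *q, struct tcb *t)
{
    if (t->queued)
        return;
    t->next = q->head;
    q->head = t;
    t->queued = 1;
    q->queue_ops++;
}

/* Lazy block: modify only the TCB and leave the queue untouched. */
static void ipc_block(struct tcb *t)
{
    t->st = BLOCKED_IPC;
}

/* Lazy unblock: if the TCB is still linked, no queue operation happens. */
static void ipc_unblock(struct ready_queue *q, struct tcb *t)
{
    t->st = READY;
    rq_enqueue(q, t);
}

/* Scheduler: drop stale (blocked) entries found at the head, then pick. */
static struct tcb *pick_next(struct ready_queue *q)
{
    while (q->head != NULL && q->head->st != READY) {
        struct tcb *stale = q->head;
        q->head = stale->next;
        stale->next = NULL;
        stale->queued = 0;
        q->queue_ops++;
    }
    return q->head;
}
```

A short block/unblock pair costs no queue operation, while `pick_next` pays for every stale entry it meets. That is the coupling between scheduling time and IPC density noted in the list.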

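ChCore IPC source code analysis

First, let's look at how server_handler is defined in the header file.

In the memory used for communication, the first 8 bytes hold the IPC response header (in practice just return_cap_num, the number of capabilities returned), while server_handler receives the shared memory's address, its length, the number of capabilities sent, and the client's badge (an ID identifying the client's cap group).

(Forgot what a cap group is? See https://sjtu-ipads.github.io/OS-Course-Lab/Lab3/RTFSC.html)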

// build/chcore-libc/include/uapi/ipc.h

// clang-format off

/**

* This structure would be placed at the front of shared memory

* of an IPC connection. So it should be 8 bytes aligned, letting

* any kinds of data structures following it can be properly aligned.

*

* This structure is written by IPC server, and read by client.

* IPC server should never read from it.

*

* Layout of shared memory is shown as follows:

* ┌───┬──────────────────────────────────────────────┐

* │ │ │

* │ │ │

* │ │ custom data(defined by IPC protocol) │

* │ │ │

* │ │ │

* └─┬─┴──────────────────────────────────────────────┘

* │

* │

* ▼

* struct ipc_response_hdr

*/

// clang-format on

struct ipc_response_hdr {

unsigned int return_cap_num;

} __attribute__((aligned(8)));

#define SHM_PTR_TO_CUSTOM_DATA_PTR(shm_ptr) ((void *)((char *)(shm_ptr) + sizeof(struct ipc_response_hdr)))

/**

* @brief This type specifies the function signature that an IPC server

* should follow to be properly called by the kernel.

*

* @param shm_ptr: pointer to start address of IPC shared memory. Use

* SHM_PTR_TO_CUSTOM_DATA_PTR macro to convert it to concrete custom

* data pointer.

* @param max_data_len: length of IPC shared memory.

* @param send_cap_num: number of capabilities sent by client in this request.

* @param client_badge: badge of client.

*/

typedef void (*server_handler)(void *shm_ptr, unsigned int max_data_len, unsigned int send_cap_num, badge_t client_badge);
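
To make the layout concrete, here is a minimal sketch of a handler that follows the `server_handler` signature. It is an illustration under assumptions: `badge_t` is stood in by `unsigned int`, and `struct add_msg` and `add_handler` are a hypothetical protocol. A real ChCore handler would also hand control back through the IPC return path, which this sketch leaves out.

```c
/* Stand-in for ChCore's badge_t; the real definition is an assumption here. */
typedef unsigned int badge_t;

struct ipc_response_hdr {
    unsigned int return_cap_num;
} __attribute__((aligned(8)));

#define SHM_PTR_TO_CUSTOM_DATA_PTR(shm_ptr) \
    ((void *)((char *)(shm_ptr) + sizeof(struct ipc_response_hdr)))

/* Hypothetical protocol: the client fills a and b, the server writes sum. */
struct add_msg {
    int a;
    int b;
    int sum;
};

/* Matches the server_handler signature shown above. */
static void add_handler(void *shm_ptr, unsigned int max_data_len,
                        unsigned int send_cap_num, badge_t client_badge)
{
    (void)send_cap_num;
    (void)client_badge;

    /* Refuse to touch memory beyond the shared region. */
    if (max_data_len < sizeof(struct ipc_response_hdr) + sizeof(struct add_msg))
        return;

    /* Custom data starts right after the 8-byte response header. */
    struct add_msg *msg = SHM_PTR_TO_CUSTOM_DATA_PTR(shm_ptr);
    msg->sum = msg->a + msg->b;

    /* The server writes the header; this reply carries no capabilities. */
    ((struct ipc_response_hdr *)shm_ptr)->return_cap_num = 0;
}
```

Because of the `aligned(8)` attribute, `sizeof(struct ipc_response_hdr)` is 8 even though the struct holds a single `unsigned int`, so the custom data begins at offset 8.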

Next, let's see how a server is registered.

// user/system-services/chcore-libc/libchcore/porting/overrides/src/chcore-port/ipc.c

/*

* Currently, a server thread can only invoke this interface once.

* But, a server can use another thread to register a new service.

*/

int ipc_register_server_with_destructor(server_handler server_handler,

void *(*client_register_handler)(void *),

server_destructor server_destructor)

{

cap_t register_cb_thread_cap;

int ret;

/*

* Create a passive thread for handling IPC registration.

* - run after a client wants to register

* - be responsible for initializing the ipc connection

*/

#define ARG_SET_BY_KERNEL 0

pthread_t handler_tid;

register_cb_thread_cap =

chcore_pthread_create_register_cb(&handler_tid,

NULL,

client_register_handler,

(void *)ARG_SET_BY_KERNEL);

BUG_ON(register_cb_thread_cap < 0);

/*

* Kernel will pass server_handler as the argument for the

* register_cb_thread.

*/

ret = usys_register_server((unsigned long)server_handler,

(unsigned long)register_cb_thread_cap,

(unsigned long)server_destructor);

if (ret != 0) {

printf("%s failed (retval is %d)\n", __func__, ret);

}

return ret;

}

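The core consists of two functions.

The first is chcore_pthread_create_register_cb, which creates the pthread that performs registration as a passive thread (it waits in the kernel until a client registers rather than being actively scheduled) and returns its capability.

Inside, it also takes care of a lot of dirty work, such as making the thread entry point mimic a function call under the architecture's ABI, and setting up the kernel stack and TLS.

The second is usys_register_server, which merely performs a syscall.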

int usys_register_server(unsigned long callback,

cap_t register_thread_cap,

unsigned long destructor)

{

return chcore_syscall3(CHCORE_SYS_register_server,

callback,

register_thread_cap,

destructor);

}

int sys_register_server(unsigned long ipc_routine, cap_t register_thread_cap,

unsigned long destructor)

{

return register_server(

current_thread, ipc_routine, register_thread_cap, destructor);

}

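The function this syscall actually calls is shown below.

Here server is simply current_thread, which is how a design similar to the LRPC optimization is realized.

The library then invokes the system call provided by ChCore:

sys_register_server. It is implemented in kernel/ipc/connection.c, and it allocates and initializes a struct ipc_server_config and a struct ipc_server_register_cb_config. It sets the general_ipc_config field of the calling thread (the main thread) to the new struct ipc_server_config, which records the registration callback thread and the entry function of the IPC service thread (ipc_dispatcher in the figure). It sets the general_ipc_config field of the registration callback thread to the new struct ipc_server_register_cb_config, which records the registration callback thread's entry function, its user-space stack address, and related information.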

/*

* Overall, a server thread that declares a service with this interface

* should specify:

* @ipc_routine (the real ipc service routine entry),

* @register_thread_cap (another server thread for handling client

* registration), and

* @destructor (one routine invoked when some connection is closed).

*/

static int register_server(struct thread *server, unsigned long ipc_routine,

cap_t register_thread_cap, unsigned long destructor)

{

struct ipc_server_config *config;

struct thread *register_cb_thread;

struct ipc_server_register_cb_config *register_cb_config;

BUG_ON(server == NULL);

if (server->general_ipc_config != NULL) {

kdebug("A server thread can only invoke **register_server** once!\n");

return -EINVAL;

}

/*

* Check the passive thread in server for handling

* client registration.

*/

register_cb_thread =

obj_get(current_cap_group, register_thread_cap, TYPE_THREAD);

if (!register_cb_thread) {

kdebug("A register_cb_thread is required.\n");

return -ECAPBILITY;

}

if (register_cb_thread->thread_ctx->type != TYPE_REGISTER) {

kdebug("The register_cb_thread should be TYPE_REGISTER!\n");

obj_put(register_cb_thread);

return -EINVAL;

}

config = kmalloc(sizeof(*config));

if (!config) {

obj_put(register_cb_thread);

return -ENOMEM;

}

/*

* @ipc_routine will be the real ipc_routine_entry.

* No need to validate such address because the server just

* kill itself if the address is illegal.

*/

config->declared_ipc_routine_entry = ipc_routine;

/* Record the registration cb thread */

config->register_cb_thread = register_cb_thread;

register_cb_config = kmalloc(sizeof(*register_cb_config));

if (!register_cb_config) {

kfree(config);

obj_put(register_cb_thread);

return -ENOMEM;

}

register_cb_thread->general_ipc_config = register_cb_config;

/*

* This lock will be used to prevent concurrent client threads

* from registering.

* In other words, a register_cb_thread can only serve

* registration requests one-by-one.

*/

lock_init(&register_cb_config->register_lock);

/* Record PC as well as the thread's initial stack (SP). */

register_cb_config->register_cb_entry =

arch_get_thread_next_ip(register_cb_thread);

register_cb_config->register_cb_stack =

arch_get_thread_stack(register_cb_thread);

register_cb_config->destructor = destructor;

obj_put(register_cb_thread);

#if defined(CHCORE_ARCH_AARCH64)

/* The following fence can ensure: the config related data,

* e.g., the register_lock, can be seen when

* server->general_ipc_config is set.

*/

smp_mb();

#else

/* TSO: the fence is not required. */

#endif

/*

* The last step: fill the general_ipc_config.

* This field is also treated as the whether the server thread

* declares an IPC service (or makes the service ready).

*/

server->general_ipc_config = config;

return 0;

}
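
The last step of register_server follows an "initialize first, publish the pointer last" pattern: a reader that sees a non-NULL general_ipc_config must also see fully initialized contents. The kernel uses a full barrier (smp_mb) for this. The sketch below swaps in C11 release/acquire atomics as a user-space analogue; `struct mini_config`, `published`, and both function names are hypothetical.

```c
#include <stdatomic.h>
#include <stddef.h>

/* Hypothetical miniature of the server config; fields are illustrative. */
struct mini_config {
    unsigned long routine_entry;
    int lock_ready;
};

/* Plays the role of server->general_ipc_config. */
static _Atomic(struct mini_config *) published = NULL;

/* Writer: fill in every field, then publish the pointer as the last step. */
static void publish_config(struct mini_config *cfg, unsigned long entry)
{
    cfg->routine_entry = entry;
    cfg->lock_ready = 1;
    /* Release ordering: the stores above are visible before the pointer. */
    atomic_store_explicit(&published, cfg, memory_order_release);
}

/* Reader: NULL means the service is not ready yet and the caller retries. */
static struct mini_config *lookup_config(void)
{
    return atomic_load_explicit(&published, memory_order_acquire);
}
```

A NULL result corresponds to the case where a client registers too early and is told to retry.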

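Next, the client establishes a connection.

The client creates the shared memory and shares it with the server handler.

PMO_DATA is used here rather than PMO_SHM because PMO_DATA has no lazy allocation, and a small-memory case such as IPC registration does not need lazy allocation.

usys_create_pmo and usys_yield are likewise thin wrappers around syscalls.

The client then tries to issue the registration system call.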

/*

* A client thread can register itself for multiple times.

*

* The returned ipc_struct_t is from heap,

* so the caller needs to free it.

*/

ipc_struct_t *ipc_register_client(cap_t server_thread_cap)

{

cap_t conn_cap;

ipc_struct_t *client_ipc_struct;

struct client_shm_config shm_config;

cap_t shm_cap;

client_ipc_struct = malloc(sizeof(ipc_struct_t));

if (client_ipc_struct == NULL) {

return NULL;

}

/*

* Before registering client on the server,

* the client allocates the shm (and shares it with

* the server later).

*

* Now we used PMO_DATA instead of PMO_SHM because:

* - SHM (IPC_PER_SHM_SIZE) only contains one page and

* PMO_DATA is thus more efficient.

*

* If the SHM becomes larger, we can use PMO_SHM instead.

* Both types are tested and can work well.

*/

// shm_cap = usys_create_pmo(IPC_PER_SHM_SIZE, PMO_SHM);

shm_cap = usys_create_pmo(IPC_PER_SHM_SIZE, PMO_DATA);

if (shm_cap < 0) {

printf("usys_create_pmo ret %d\n", shm_cap);

goto out_free_client_ipc_struct;

}

shm_config.shm_cap = shm_cap;

shm_config.shm_addr = chcore_alloc_vaddr(IPC_PER_SHM_SIZE); // 0x1000

// printf("%s: register_client with shm_addr 0x%lx\n",

// __func__, shm_config.shm_addr);

while (1) {

conn_cap = usys_register_client(server_thread_cap,

(unsigned long)&shm_config);

if (conn_cap == -EIPCRETRY) {

// printf("client: Try to connect again ...\n");

/* The server IPC may be not ready. */

usys_yield();

} else if (conn_cap < 0) {

printf("client: %s failed (return %d), server_thread_cap is %d\n",

__func__,

conn_cap,

server_thread_cap);

goto out_free_vaddr;

} else {

/* Success */

break;

}

}

client_ipc_struct->lock = 0;

client_ipc_struct->shared_buf = shm_config.shm_addr;

client_ipc_struct->shared_buf_len = IPC_PER_SHM_SIZE;

client_ipc_struct->conn_cap = conn_cap;

return client_ipc_struct;

out_free_vaddr:

usys_revoke_cap(shm_cap, false);

chcore_free_vaddr(shm_config.shm_addr, IPC_PER_SHM_SIZE);

out_free_client_ipc_struct:

free(client_ipc_struct);

return NULL;

}

int ipc_client_close_connection(ipc_struct_t *ipc_struct)

{

int ret;

while (1) {

ret = usys_ipc_close_connection(ipc_struct->conn_cap);

if (ret == -EAGAIN) {

usys_yield();

} else if (ret < 0) {

goto out;

} else {

break;

}

}

chcore_free_vaddr(ipc_struct->shared_buf, ipc_struct->shared_buf_len);

free(ipc_struct);

out:

return ret;

}
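
The retry loop in ipc_register_client can be isolated as follows. This is a sketch under assumptions: `mock_register_client` is a hypothetical stand-in for usys_register_client, `EIPCRETRY_SKETCH` is a placeholder value rather than ChCore's real error code, and POSIX `sched_yield` takes the place of usys_yield.

```c
#include <sched.h>

#define EIPCRETRY_SKETCH 1 /* placeholder value, not ChCore's real code */

/* Hypothetical stand-in for usys_register_client: fails until "ready". */
static int polls_until_ready = 3;

static int mock_register_client(void)
{
    if (polls_until_ready > 0) {
        polls_until_ready--;
        return -EIPCRETRY_SKETCH;
    }
    return 7; /* a made-up connection capability */
}

/* Retry on the "not ready" code, yield in between, stop on anything else. */
static int register_with_retry(int *attempts)
{
    for (;;) {
        int conn_cap = mock_register_client();
        (*attempts)++;
        if (conn_cap == -EIPCRETRY_SKETCH) {
            sched_yield(); /* give the server a chance to run */
            continue;
        }
        return conn_cap; /* success (>= 0) or a hard failure (< 0) */
    }
}
```

Only the retry code loops; any other negative value is treated as a hard failure and returned to the caller, which matches the three branches in the listing above.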

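The function behind the registration system call is shown below.

The overall flow is:

- In the current thread's cap_group, find the slot for the given server_cap and from it obtain the server's thread control block

- Get the server's IPC config and take the lock to avoid concurrency problems

- After checking that the shared memory address declared by the client is valid, copy the config into kernel space and then map the shared memory

- Create the connection object and hand its caps to the server and the client

- Set up the call argument, the stack register, and the exception-handling register

- Call sched to hand control to the registered callback function

- (Note that the whole flow involves no IPI)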
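
The address check in the third step can be sketched as below. This is not ChCore's implementation: `check_user_range_sketch` and the boundary `USER_SPACE_END_SKETCH` are assumptions chosen for illustration. The point is that both wrap-around and kernel addresses have to be rejected before copy_from_user runs.

```c
#include <stddef.h>
#include <stdint.h>

/* Assumed boundary between user and kernel space; purely illustrative. */
#define USER_SPACE_END_SKETCH 0x0000800000000000ULL

/* Returns 0 when [start, start + len) lies in user space, -1 otherwise. */
static int check_user_range_sketch(uint64_t start, size_t len)
{
    uint64_t end = start + (uint64_t)len;

    if (end < start) /* the range wrapped around the address space */
        return -1;
    if (end > USER_SPACE_END_SKETCH) /* the range reaches kernel addresses */
        return -1;
    return 0;
}
```

The comparison with 0 applies to the function's result, so it belongs outside the argument list, as in `check_user_range_sketch(ptr, sizeof(cfg)) != 0`.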

cap_t sys_register_client(cap_t server_cap, unsigned long shm_config_ptr)

{

struct thread *client;

struct thread *server;

/*

* No need to initialize actually.

* However, fbinfer will complain without zeroing because

* it cannot tell copy_from_user.

*/

struct client_shm_config shm_config = {0};

int r;

struct client_connection_result res;

struct ipc_server_config *server_config;

struct thread *register_cb_thread;

struct ipc_server_register_cb_config *register_cb_config;

client = current_thread;

server = obj_get(current_cap_group, server_cap, TYPE_THREAD);

if (!server) {

r = -ECAPBILITY;

goto out_fail;

}

server_config =

(struct ipc_server_config *)(server->general_ipc_config);

if (!server_config) {

r = -EIPCRETRY;

goto out_fail;

}

/*

* Locate the register_cb_thread first.

* And later, directly transfer the control flow to it

* for finishing the registration.

*

* The whole registration procedure:

* client thread -> server register_cb_thread -> client thread

*/

register_cb_thread = server_config->register_cb_thread;

register_cb_config =

(struct ipc_server_register_cb_config

*)(register_cb_thread->general_ipc_config);

/* Acquiring register_lock: avoid concurrent client registration.

*

* Use try_lock instead of lock since the unlock operation is done by

* another thread and ChCore does not support mutex.

* Otherwise, dead lock may happen.

*/

if (try_lock(&register_cb_config->register_lock) != 0) {

r = -EIPCRETRY;

goto out_fail;

}

/* Validate the user addresses before accessing them */

if (check_user_addr_range(shm_config_ptr, sizeof(shm_config)) != 0) {

r = -EINVAL;

goto out_fail_unlock;

}

r = copy_from_user((void *)&shm_config,

(void *)shm_config_ptr,

sizeof(shm_config));

if (r) {

r = -EINVAL;

goto out_fail_unlock;

}

/* Map the pmo of the shared memory */

r = map_pmo_in_current_cap_group(

shm_config.shm_cap, shm_config.shm_addr, VMR_READ | VMR_WRITE);

if (r != 0) {

goto out_fail_unlock;

}

/* Create the ipc_connection object */

r = create_connection(

client, server, shm_config.shm_cap, shm_config.shm_addr, &res);

if (r != 0) {

goto out_fail_unlock;

}

/* Record the connection cap of the client process */

register_cb_config->conn_cap_in_client = res.client_conn_cap;

register_cb_config->conn_cap_in_server = res.server_conn_cap;

/* Record the server_shm_cap for current connection */

register_cb_config->shm_cap_in_server = res.server_shm_cap;

/* Mark current_thread as TS_BLOCKING */

thread_set_ts_blocking(current_thread);

/* Set target thread SP/IP/arg */

arch_set_thread_stack(register_cb_thread,

register_cb_config->register_cb_stack);

arch_set_thread_next_ip(register_cb_thread,

register_cb_config->register_cb_entry);

arch_set_thread_arg0(register_cb_thread,

server_config->declared_ipc_routine_entry);

obj_put(server);

/* Pass the scheduling context */

register_cb_thread->thread_ctx->sc = current_thread->thread_ctx->sc;

/* On success: switch to the cb_thread of server */

sched_to_thread(register_cb_thread);

/* Never return */

BUG_ON(1);

out_fail_unlock:

unlock(&register_cb_config->register_lock);

out_fail: /* Maybe EAGAIN */

if (server)

obj_put(server);

return r;

}
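
The registration lock is taken in sys_register_client and released later in sys_ipc_register_cb_return, that is, by a different thread. With such a handoff, a blocking lock could deadlock, so the code uses try_lock and reports a retry code instead. A minimal user-space sketch of that convention follows; `struct handoff_lock` and both function names are hypothetical, and C11 `atomic_flag` stands in for the kernel's lock type.

```c
#include <stdatomic.h>

/* Hypothetical lock; returning 0 on success mirrors the kernel's try_lock. */
struct handoff_lock {
    atomic_flag held;
};

static int try_lock_sketch(struct handoff_lock *l)
{
    /* test_and_set returns the previous value: true means already held. */
    if (atomic_flag_test_and_set_explicit(&l->held, memory_order_acquire))
        return -1;
    return 0;
}

/* May be called by a different thread than the one that took the lock. */
static void unlock_sketch(struct handoff_lock *l)
{
    atomic_flag_clear_explicit(&l->held, memory_order_release);
}
```

A second registration attempt fails immediately while the first is in flight, and the caller is expected to yield and try again.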

This registration callback is the function set when the server registered, i.e. the function (code) passed in by the server thread. It generally takes the default, register_cb.

#define DEFAULT_CLIENT_REGISTER_HANDLER register_cb

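The register_cb function is shown below.

It first allocates a virtual address at which to map the shared memory, then creates a service thread.

It then enters the kernel through the sys_ipc_register_cb_return system call, which maps the shared memory at the address just allocated, fills in some metadata in the struct ipc_connection kernel object, and switches back to the client thread. The client thread returns from ipc_register_client, which completes the IPC connection setup.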

/* A register_callback thread uses this to finish a registration */

void ipc_register_cb_return(cap_t server_thread_cap,

unsigned long server_thread_exit_routine,

unsigned long server_shm_addr)

{

usys_ipc_register_cb_return(

server_thread_cap, server_thread_exit_routine, server_shm_addr);

}

/* A register_callback thread is passive (never proactively run) */

void *register_cb(void *ipc_handler)

{

cap_t server_thread_cap = 0;

unsigned long shm_addr;

shm_addr = chcore_alloc_vaddr(IPC_PER_SHM_SIZE);

// printf("[server]: A new client comes in! ipc_handler: 0x%lx\n",

// ipc_handler);

/*

* Create a passive thread for serving IPC requests.

* Besides, reusing an existing thread is also supported.

*/

pthread_t handler_tid;

server_thread_cap = chcore_pthread_create_shadow(

&handler_tid, NULL, ipc_handler, (void *)NO_ARG);

BUG_ON(server_thread_cap < 0);

#ifndef CHCORE_ARCH_X86_64

ipc_register_cb_return(server_thread_cap,

(unsigned long)ipc_shadow_thread_exit_routine,

shm_addr);

#else

ipc_register_cb_return(

server_thread_cap,

(unsigned long)ipc_shadow_thread_exit_routine_naked,

shm_addr);

#endif

return NULL;

}

int sys_ipc_register_cb_return(cap_t server_handler_thread_cap,

unsigned long server_thread_exit_routine,

unsigned long server_shm_addr)

{

struct ipc_server_register_cb_config *config;

struct ipc_connection *conn;

struct thread *client_thread;

struct thread *ipc_server_handler_thread;

struct ipc_server_handler_config *handler_config;

int r = -ECAPBILITY;

config = (struct ipc_server_register_cb_config *)

current_thread->general_ipc_config;

if (!config)

goto out_fail;

conn = obj_get(

current_cap_group, config->conn_cap_in_server, TYPE_CONNECTION);

if (!conn)

goto out_fail;

/*

* @server_handler_thread_cap from server.

* Server uses this handler_thread to serve ipc requests.

*/

ipc_server_handler_thread = (struct thread *)obj_get(

current_cap_group, server_handler_thread_cap, TYPE_THREAD);

if (!ipc_server_handler_thread)

goto out_fail_put_conn;

/* Map the shm of the connection in server */

r = map_pmo_in_current_cap_group(config->shm_cap_in_server,

server_shm_addr,

VMR_READ | VMR_WRITE);

if (r != 0)

goto out_fail_put_thread;

/* Get the client_thread that issues this registration */

client_thread = conn->current_client_thread;

/*

* Set the return value (conn_cap) for the client here

* because the server has approved the registration.

*/

arch_set_thread_return(client_thread, config->conn_cap_in_client);

/*

* Initialize the ipc configuration for the handler_thread (begin)

*

* When the handler_config isn't NULL, it means this server handler

* thread has been initialized before. If so, skip the initialization.

* This will happen when a server uses one server handler thread for

* serving multiple client threads.

*/

if (!ipc_server_handler_thread->general_ipc_config) {

handler_config = (struct ipc_server_handler_config *)kmalloc(

sizeof(*handler_config));

if (!handler_config) {

r = -ENOMEM;

goto out_fail_put_thread;

}

ipc_server_handler_thread->general_ipc_config = handler_config;

lock_init(&handler_config->ipc_lock);

/*

* Record the initial PC & SP for the handler_thread.

* For serving each IPC, the handler_thread starts from the

* same PC and SP.

*/

handler_config->ipc_routine_entry =

arch_get_thread_next_ip(ipc_server_handler_thread);

handler_config->ipc_routine_stack =

arch_get_thread_stack(ipc_server_handler_thread);

handler_config->ipc_exit_routine_entry =

server_thread_exit_routine;

handler_config->destructor = config->destructor;

}

obj_put(ipc_server_handler_thread);

/* Initialize the ipc configuration for the handler_thread (end) */

/* Fill the server information in the IPC connection. */

conn->shm.server_shm_uaddr = server_shm_addr;

conn->server_handler_thread = ipc_server_handler_thread;

conn->state = CONN_VALID;

conn->current_client_thread = NULL;

conn->conn_cap_in_client = config->conn_cap_in_client;

conn->conn_cap_in_server = config->conn_cap_in_server;

obj_put(conn);

/*

* Return control flow (sched-context) back later.

* Set current_thread state to TS_WAITING again.

*/

thread_set_ts_waiting(current_thread);

unlock(&config->register_lock);

/* Register thread should not any more use the client's scheduling

* context. */

current_thread->thread_ctx->sc = NULL;

/* Finish the registration: switch to the original client_thread */

sched_to_thread(client_thread);

/* Never return */

out_fail_put_thread:

obj_put(ipc_server_handler_thread);

out_fail_put_conn:

obj_put(conn);

out_fail:

return r;

}

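To summarize the flow above:

- The server registers a callback, stating that function f should handle a client's connection, and creates the kernel objects associated with f (such as capabilities and thread context). In effect f is a thread that is never actively scheduled, because it has no scheduling context (sc)

- The client registers: it allocates shared memory, specifies the call arguments, and makes the syscall; after validation the kernel hands control to f

- f handles the request and returns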
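
The three steps can be compressed into a toy model that runs in a single address space. Everything here is hypothetical (`struct toy_server`, the return codes, the function names); it shows only the ordering of events, not ChCore's data structures or its thread switching.

```c
#include <stddef.h>

typedef void *(*register_cb_fn)(void *ipc_handler);

/* Toy "kernel" state; every name here is hypothetical. */
struct toy_server {
    void *ipc_routine;          /* declared service routine */
    register_cb_fn register_cb; /* passive callback run when a client registers */
    int busy;                   /* plays the role of the registration lock */
    int next_conn_cap;
    int handlers_created;
};

/* Step 1: the server declares its routine and its registration callback. */
static int toy_register_server(struct toy_server *s, void *routine,
                               register_cb_fn cb)
{
    if (s->register_cb != NULL)
        return -1; /* a server may register only once */
    s->ipc_routine = routine;
    s->register_cb = cb;
    return 0;
}

/* Step 2: the client registers and the "kernel" runs the callback.
 * Step 3: when the callback returns, the client gets its connection cap. */
static int toy_register_client(struct toy_server *s)
{
    if (s->register_cb == NULL || s->busy)
        return -2; /* not ready or busy: the client should retry */
    s->busy = 1;
    s->register_cb(s->ipc_routine); /* control flow moves to the callback */
    s->handlers_created++;
    s->busy = 0; /* released once the callback has returned */
    return s->next_conn_cap++;
}

/* A trivial callback standing in for register_cb. */
static void *toy_cb(void *ipc_handler)
{
    return ipc_handler;
}
```

In the real system the callback runs on its own passive thread borrowing the client's scheduling context, whereas this model simply calls it as a function.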